How Noise Reduction Improves Speech Accuracy

Noise can destroy transcription accuracy. Speech-to-text systems that perform well in quiet environments (95% accuracy) can drop below 70% in noisy settings like busy offices or streets. This happens because background sounds - like HVAC hums, traffic, or overlapping voices - interfere with the audio patterns that these systems rely on.

Modern approaches to noise reduction solve this by training systems on real-world noisy audio, rather than relying on traditional filters that often remove critical speech details. For example, CVS Health processed over 140,000 pharmacy calls per hour with 92% accuracy in noisy conditions by using models trained on diverse, noisy audio.

Key takeaways:

- Noise types matter: Stationary (fans), non-stationary (traffic), and competing speech (overlapping voices) affect transcription differently.

- Old methods fall short: Traditional filters can strip away important speech cues.

- New solutions work better: Neural networks trained on noisy data cut word error rates by 7.5% to 20% compared with models trained only on clean audio.

- Practical tips: Use raw audio, disable external filters, and test in real-world conditions for the best results.

Noise reduction isn’t just about cleaner audio - it’s about smarter systems that handle complex, noisy environments effectively.


How Background Noise Affects Speech Accuracy

How Different Noise Types Affect Speech Recognition Accuracy

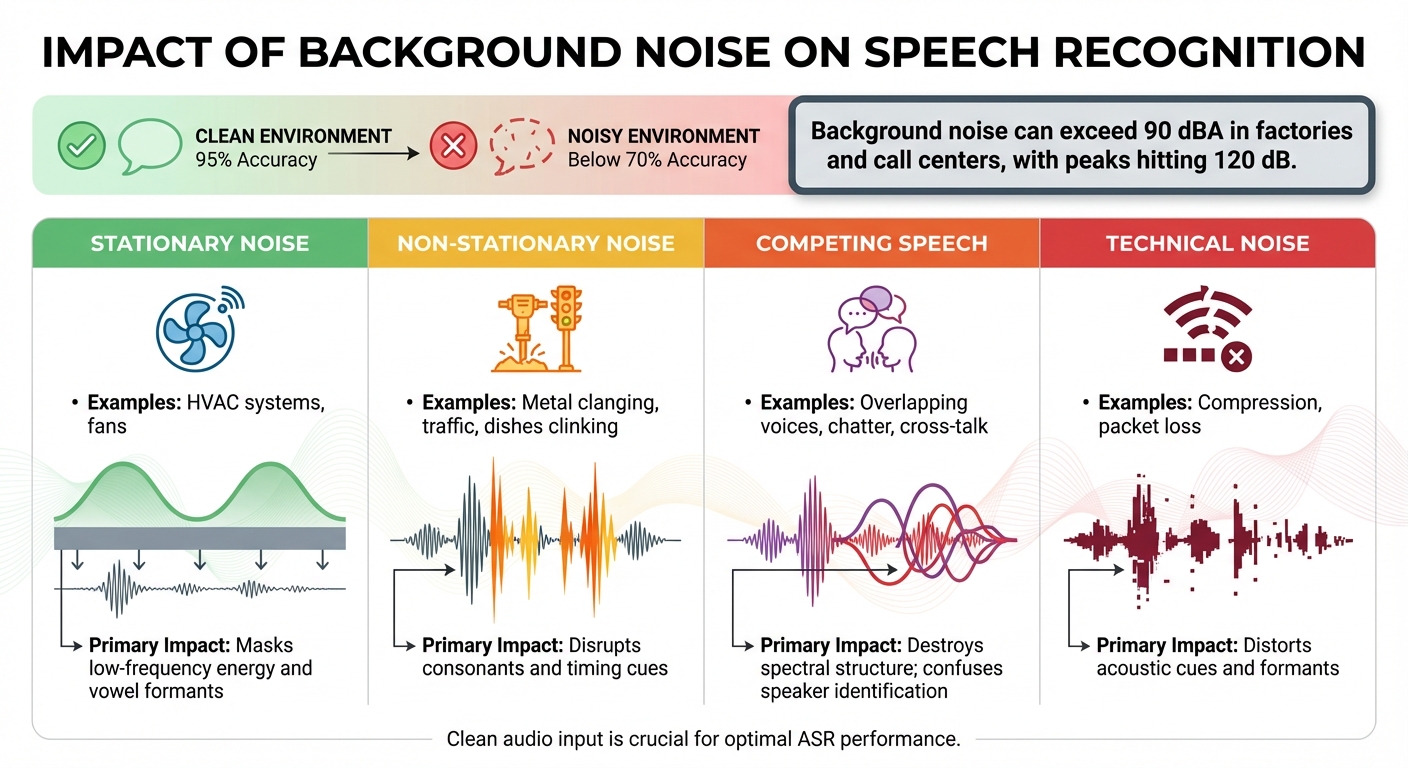

Background noise doesn’t just make audio harder to hear - it actively interferes with the acoustic patterns that speech recognition systems rely on. Speech and noise often share the same frequency ranges, which means traditional algorithms can inadvertently strip away critical speech details while trying to filter out the noise. Let’s explore how different types of background noise uniquely disrupt transcription accuracy.

In high-noise environments like factories or bustling call centers, background noise levels can exceed 90 dBA, with peaks hitting 120 dB. In such conditions, systems that typically achieve 95% accuracy in clean audio scenarios may see their performance drop below 70%.

Types of Background Noise and Their Impact

Not all noise affects transcription accuracy in the same way. Here’s how various noise types impair speech recognition:

- Stationary noise: Constant sounds like HVAC systems or fans mask low-frequency energy and vowel formants, making it harder for systems to process speech.

- Non-stationary noise: Irregular sounds such as metal clanging, traffic, or dishes clinking disrupt consonants and timing cues, which are vital for accurate transcription.

- Competing speech: Overlapping voices, common in open offices or contact centers, compromise the spectral structure required for transcription. This overlap confuses speaker identification and muddles the output.

For example, in July 2025, a healthcare startup handling patient calls near ventilators saw generic ASR APIs achieve only 60% accuracy. By switching to a domain-specific model built to handle this environmental noise, they improved accuracy to 94% without adding any noise-reduction filters.

Challenges for Automatic Speech Recognition (ASR) Systems

ASR systems rely on subtle acoustic cues - like formants, pitch contours, timing of syllables, and micro-pauses - to distinguish between similar-sounding words such as "mat" and "bat". When background noise obscures these cues, transcription errors multiply.

Another issue is that many ASR models are trained on clean or lightly simulated audio. When exposed to real-world audio - whether filled with natural background noise or heavily filtered - the acoustic patterns can seem unfamiliar, leading to a significant drop in accuracy.

Bridget McGillivray from Deepgram explains, "The challenge is noise: its variety, its patterns, and the way it interacts with models that were never trained to survive it".

Here’s a quick summary of how different noise types affect ASR performance:

| Noise Type | Primary Impact on ASR |
|---|---|
| Stationary (HVAC, fans) | Masks low-frequency energy and vowel formants |
| Non-Stationary (clanging, traffic) | Disrupts consonants and timing cues |
| Competing Speech (chatter, cross-talk) | Destroys spectral structure; confuses speaker identification |
| Technical (compression, packet loss) | Distorts acoustic cues and formants |

How Noise Reduction Technology Works

At its core, noise reduction technology works by analyzing audio to separate speech from unwanted noise. Traditional systems rely on Voice Activity Detection (VAD), which identifies speech and builds a noise profile during pauses. More advanced methods now employ neural networks to distinguish human speech from a wide range of background sounds.
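To make the VAD idea concrete, here is a minimal sketch of an energy-based detector that updates its noise estimate only during pauses. It is an illustration under simple assumptions, not how any particular product works: the names `simple_vad`, `margin_db`, and `adapt` are hypothetical, samples are assumed to be floats in the range -1.0 to 1.0, and the recording is assumed to start with noise only.

```python
import math

def frame_energy_db(frame):
    """Mean power of one frame in dB relative to full scale (1.0)."""
    power = sum(s * s for s in frame) / len(frame)
    return 10 * math.log10(power + 1e-12)  # small offset avoids log(0)

def simple_vad(samples, frame_len=160, margin_db=6.0, adapt=0.1):
    """Label each frame as speech (True) or pause (False).

    The noise floor is updated only on frames classified as pauses,
    which is how a noise profile gets built between words.
    Returns (decisions, final_noise_floor_db).
    """
    frames = [samples[i:i + frame_len]
              for i in range(0, len(samples) - frame_len + 1, frame_len)]
    if not frames:
        return [], None
    # Assumption: the first frame contains background noise only.
    noise_db = frame_energy_db(frames[0])
    decisions = []
    for frame in frames:
        energy = frame_energy_db(frame)
        is_speech = energy > noise_db + margin_db
        if not is_speech:
            # Exponential moving average of the noise level during pauses.
            noise_db = (1 - adapt) * noise_db + adapt * energy
        decisions.append(is_speech)
    return decisions, noise_db
```

A fixed energy margin like this only copes with steady noise. A sudden clang would be labeled as speech, which is one reason production systems lean on learned models instead.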

The real challenge lies in separating speech from noise, especially when they overlap in frequency ranges. Cutting-edge algorithms like Amazon's PercepNet tackle this by using pitch-tuned comb filters, which focus on the fundamental frequency of voiced speech (like vowels) and suppress noise between the harmonics of the human voice. Other systems, such as those using Recurrent Neural Networks (RNNs) and Gated Recurrent Units (GRUs), track sound patterns over time. This allows them to differentiate speech's rhythmic qualities from the irregular or constant patterns of noise.

Common Noise Reduction Algorithms

Several algorithms are commonly used to reduce noise, each with its strengths and limitations:

- Spectral subtraction: This method estimates noise during silent moments and subtracts it from the audio signal. While it can improve the signal-to-noise ratio by 8 dB, it often increases word error rates by 15% because it can inadvertently remove parts of the speech signal.

- Beamforming: By using microphone arrays, this technique "steers" audio pickup toward the speaker, reducing interference from other directions. It works well in controlled setups but requires specific hardware configurations.

- Adaptive and Wiener filtering: These filters adjust in real time to minimize errors between the desired and actual signals. They are particularly effective for steady noise, like the hum of an HVAC system.
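As a rough illustration of the first technique in the list, the sketch below applies power-domain spectral subtraction to a single frame. It assumes you already have magnitude spectra for the noisy frame and for the noise estimate (for example, averaged over silent frames). The function name and the `over_subtraction` and `floor` parameters are illustrative choices, not taken from any specific library.

```python
import math

def spectral_subtract(noisy_mag, noise_mag, over_subtraction=1.0, floor=0.05):
    """Subtract an estimated noise spectrum from one frame, bin by bin.

    noisy_mag and noise_mag are magnitude spectra of equal length.
    Subtraction happens in the power domain. `floor` keeps a fraction of
    the noisy power in every bin so the result never goes negative.
    """
    if len(noisy_mag) != len(noise_mag):
        raise ValueError("spectra must have the same number of bins")
    cleaned = []
    for y, n in zip(noisy_mag, noise_mag):
        power = y * y - over_subtraction * n * n
        power = max(power, floor * y * y)  # spectral floor
        cleaned.append(math.sqrt(power))
    return cleaned

# Bin 0: speech well above the noise estimate, so most of it survives.
# Bin 1: speech weaker than the noise estimate, so it collapses to the floor.
print(spectral_subtract([5.0, 1.0], [3.0, 2.0]))
```

The second bin shows the weakness described above: any speech energy that sits below the noise estimate is flattened along with the noise, which is how a cleaner-sounding signal can end up with fewer of the cues an ASR model needs.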

The biggest shift, however, has been toward deep learning-based denoising. Instead of manually filtering audio, these models are trained on vast datasets containing both clean speech and noises like traffic or crowd chatter. This approach, called multi-condition training, enables the model to focus on key features like syllable timing and harmonic structures, which stay consistent even in noisy settings. As a result, these models can reduce word error rates by 7.5% to 20% compared to those trained on clean audio alone.

"Keep all the basic signal processing that's needed anyway, but let the neural network learn all the tricky parts that require endless tweaking", says Jean-Marc Valin, lead developer of RNNoise.

Many modern systems now combine traditional signal processing with neural networks. For instance, basic tasks like VAD are handled by traditional methods, while neural networks manage complex tasks like spectral gain tuning. AI-driven models like RNNoise operate with minimal latency (as low as 10 milliseconds), and Amazon's PercepNet uses less than 5% of a single CPU core to run in real time.

Balancing Noise Suppression and Speech Clarity

One of the toughest challenges in noise reduction is maintaining a balance between suppressing noise and preserving speech clarity. Experts call this the "noise reduction paradox" - audio that sounds cleaner to humans often performs worse for automatic speech recognition (ASR) systems. Aggressive filtering can strip away subtle details such as pitch variations and micro-pauses, which are essential for distinguishing similar-sounding words.

Traditional digital signal processing assumes noise is steady and predictable, but real-world environments are full of unpredictable sounds like barking dogs or keyboard clicks. Filters designed for consistent noise often distort speech when faced with these erratic sounds. For this reason, many modern ASR systems bypass standalone noise reduction altogether. Instead, they are trained to handle raw, noisy audio directly, avoiding the "cascade errors" caused by preprocessing.

The best approach depends on the environment. For steady noise like fan hums, traditional methods still work well. But for unpredictable noise, neural networks trained on diverse datasets perform far better. Deep learning-based models consistently deliver high accuracy without needing separate preprocessing steps, reducing latency and improving transcription quality in real-world applications.

Benefits of Noise Reduction for Speech-to-Text Systems

When noise reduction is effective, it transforms speech-to-text technology into a dependable tool. The advantages are especially clear in two areas: fewer transcription errors and expanded usability across diverse environments and user groups. These improvements lead to better performance, even in the most challenging conditions.

Lower Word Error Rates in Noisy Environments

One of the most tangible benefits is the significant drop in transcription errors. Word Error Rate (WER) tends to double when the Signal-to-Noise Ratio drops from 15 dB to 5 dB. However, systems designed with noise-robust models can maintain 90%+ accuracy even in environments like factories with loud HVAC systems or call centers with overlapping conversations.
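To put those decibel figures in perspective, SNR in dB is ten times the base-10 logarithm of the speech-to-noise power ratio. The short sketch below (helper names are illustrative) computes SNR from two sample lists and converts a dB value back into a power ratio.

```python
import math

def mean_power(samples):
    """Average squared amplitude of a list of samples."""
    return sum(s * s for s in samples) / len(samples)

def snr_db(speech, noise):
    """Signal-to-noise ratio in decibels."""
    return 10 * math.log10(mean_power(speech) / mean_power(noise))

def power_ratio(snr_in_db):
    """How many times stronger the speech power is than the noise power."""
    return 10 ** (snr_in_db / 10)

for level in (15, 5):
    print(f"{level} dB SNR -> speech power is {power_ratio(level):.1f}x the noise power")
```

Going from 15 dB to 5 dB means the noise power grows tenfold relative to the speech, so a drop that looks modest on paper is a large change for the recognizer.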

This success comes from training models on multi-condition datasets. By exposing these systems to real-world sounds - traffic, crowd chatter, and machinery noise - during development, WER reductions of 7.5% to 20% are achievable compared to models trained only on clean audio.

For instance, a healthcare startup offering HIPAA-compliant transcription saw its accuracy leap from 60% to 94% after switching to a noise-robust, domain-specific model for patient calls, which often involved ventilator noise. This kind of improvement directly impacts efficiency - every misinterpreted word can lead to billing errors, compliance risks, and customer dissatisfaction.

Better Accessibility and Usability

Noise reduction doesn’t just improve accuracy - it also makes speech-to-text systems practical in noisier environments. These systems can reliably operate in settings with background noise levels exceeding 90 dBA, making them invaluable for delivery drivers, warehouse staff, and technicians working in the field.

Enhanced noise handling also boosts the conversational flow of voice agents. By minimizing interruptions caused by ambient sounds like clinking dishes or background chatter, these systems avoid misinterpreting noise as speech. Features like Voice Activity Detection (VAD) further enhance efficiency, cutting transcription costs by 30% to 40% by removing silent segments from audio before processing.

"In production environments, accuracy is a direct driver of billing precision, compliance performance, and customer experience. Every misheard word results in operational friction." - Bridget McGillivray, Deepgram

For accessibility tools, especially those supporting individuals with disabilities, consistent performance is critical. Whether users are at home, in the office, or in public spaces with air conditioning or street traffic, reliable speech-to-text functionality ensures these tools remain practical for everyday use.


Implementing Noise Reduction for Better Results

If you want more accurate transcriptions, paying attention to noise reduction strategies is key. Here's how to get started.

Choose Tools Built for ASR Optimization

Stick with ASR systems designed to handle noisy, real-world audio. Modern engines are trained to recognize speech even in challenging environments - like bustling streets, HVAC noise, or overlapping conversations. Adding extra noise filters might seem helpful, but it can actually hurt performance. As Google Cloud's documentation explains:

"Applying noise-reduction signal processing to the audio before sending it to the service typically reduces recognition accuracy. The service is designed to handle noisy audio".

Turn off external noise reduction and automatic gain control (AGC) in your recording setup. These ASR systems already use advanced algorithms to separate speech from background noise. Introducing additional filters can strip away subtle acoustic details - like pitch changes or brief pauses - that neural networks rely on for precise transcription. Once you've adjusted your setup, test it to ensure it works well in real-world scenarios.

Test Performance in Your Actual Environment

The best tools are only as good as their performance in your specific environment. To verify their effectiveness, record audio in the conditions where you'll use the system - whether that's a noisy warehouse, a moving car, or a home office with street noise. For reliable results, collect 30 minutes to 3 hours of audio for testing.

Evaluate transcription accuracy across different Signal-to-Noise Ratio (SNR) levels, because Word Error Rate climbs steeply as SNR falls and tends to double between 15 dB and 5 dB. Testing at SNR levels like 15 dB, 10 dB, 5 dB, and 0 dB will help you pinpoint the "accuracy cliff" - the point where transcription quality starts to fall apart. Always use mono recordings with a minimum sample rate of 16,000 Hz in lossless formats like FLAC or LINEAR16 to retain essential audio details.
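If you cannot record at every noise level, one way to approximate an SNR sweep is to mix a noise recording into cleaner speech at controlled levels. The sketch below is a minimal example under stated assumptions: `mix_at_snr` is a hypothetical helper, both signals are plain lists of float samples at the same sample rate, and the sine wave and random noise are synthetic stand-ins for real recordings.

```python
import math
import random

def mean_power(samples):
    """Average squared amplitude of a list of samples."""
    return sum(s * s for s in samples) / len(samples)

def mix_at_snr(speech, noise, target_snr_db):
    """Scale `noise` so the speech-to-noise power ratio hits the target, then add it."""
    if len(noise) < len(speech):
        raise ValueError("noise clip must be at least as long as the speech clip")
    noise = noise[:len(speech)]
    noise_power = mean_power(noise)
    if noise_power == 0:
        raise ValueError("noise clip is silent")
    wanted_noise_power = mean_power(speech) / (10 ** (target_snr_db / 10))
    gain = math.sqrt(wanted_noise_power / noise_power)
    return [s + gain * n for s, n in zip(speech, noise)]

# Synthetic stand-ins: one second of a 220 Hz tone and Gaussian noise at 16 kHz.
rng = random.Random(0)
speech = [math.sin(2 * math.pi * 220 * t / 16000) for t in range(16000)]
noise = [rng.gauss(0, 1) for _ in range(16000)]

# One mixed version per SNR level to send through the ASR system.
test_set = {snr: mix_at_snr(speech, noise, snr) for snr in (15, 10, 5, 0)}
```

Digitally mixed noise lacks the reverberation and microphone behavior of a real room, so treat it as a supplement to recordings from your actual environment, not a replacement.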

Use Advanced Features in Modern Tools

Take advantage of modern transcription platforms that include built-in noise handling. Services like Revise process audio without requiring you to manually configure noise reduction tools. This eliminates the guesswork and ensures that the ASR system gets audio in the format it works best with.

For batch transcription projects, enable Voice Activity Detection (VAD) to automatically cut out silent sections before processing. This can reduce transcription costs by 30% to 40%. Also, if your work involves specialized vocabulary - like medical terms, legal jargon, or technical phrases - use keyword boosting features. These can improve recognition accuracy for domain-specific words by 5 to 15 percentage points.

Measuring Improvements in Speech Accuracy

Compare Word Error Rates Before and After Noise Reduction

The Word Error Rate (WER) is the go-to metric for assessing how noise reduction impacts the accuracy of speech-to-text systems. It’s calculated by adding up substitutions, deletions, and insertions, then dividing that total by the number of words in the reference transcript. A lower WER means better accuracy.
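The calculation above can be sketched with a word-level edit distance. The following is a minimal, dependency-free example. The function name `wer_breakdown` and the sample sentences are made up for illustration, and the transcripts are assumed to be already normalized.

```python
def wer_breakdown(reference, hypothesis):
    """Word Error Rate plus counts of substitutions, deletions, and insertions."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript is empty")

    # dp[i][j] = (total_edits, substitutions, deletions, insertions)
    # for aligning the first i reference words with the first j hypothesis words.
    dp = [[None] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    dp[0][0] = (0, 0, 0, 0)
    for i in range(1, len(ref) + 1):
        dp[i][0] = (i, 0, i, 0)      # only deletions
    for j in range(1, len(hyp) + 1):
        dp[0][j] = (j, 0, 0, j)      # only insertions

    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            if ref[i - 1] == hyp[j - 1]:
                dp[i][j] = dp[i - 1][j - 1]   # words match, no edit
                continue
            t, s, d, n = dp[i - 1][j - 1]
            substitution = (t + 1, s + 1, d, n)
            t, s, d, n = dp[i - 1][j]
            deletion = (t + 1, s, d + 1, n)
            t, s, d, n = dp[i][j - 1]
            insertion = (t + 1, s, d, n + 1)
            # Tuples compare by total edits first, so this keeps a cheapest path.
            dp[i][j] = min(substitution, deletion, insertion)

    total, subs, dels, ins = dp[-1][-1]
    return {"wer": total / len(ref), "substitutions": subs,
            "deletions": dels, "insertions": ins}

result = wer_breakdown("the patient needs a refill today",
                       "the patient need refill today please")
print(result)
```

In this example one word is substituted, one is dropped, and one is added against a six-word reference, giving a WER of 0.5. When several alignments have the same total cost, the split between error types can differ, so treat the breakdown as indicative.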

To get accurate results, you’ll need a "ground truth" file, which is a 100% correct, human-created transcript. Before calculating WER, normalize both transcripts by standardizing their format - convert everything to lowercase, remove unnecessary punctuation, and handle numbers consistently. Skipping this step can inflate WER by as much as 90% due to minor formatting discrepancies.
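A normalization step might look like the sketch below. It is deliberately simple and makes assumptions you should adapt: the `DIGIT_WORDS` table covers single digits only, apostrophes are kept so contractions survive, and all other punctuation becomes a space.

```python
import re

# Illustrative mapping only; real number handling (dates, prices, "2nd") needs more care.
DIGIT_WORDS = {"0": "zero", "1": "one", "2": "two", "3": "three", "4": "four",
               "5": "five", "6": "six", "7": "seven", "8": "eight", "9": "nine"}

def normalize(text):
    """Lowercase, drop punctuation, spell out single digits, collapse whitespace."""
    text = text.lower()
    text = re.sub(r"[^\w\s']", " ", text)  # keep word characters, spaces, apostrophes
    tokens = [DIGIT_WORDS.get(token, token) for token in text.split()]
    return " ".join(tokens)

print(normalize("Take 2 tablets, twice daily."))
print(normalize("take two tablets twice daily"))
```

Both calls produce the same string, so a formatting difference between the reference and the ASR output no longer counts as a word error. Apply the same function to both transcripts before scoring.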

Here’s an example: In March 2022, Nishit Kamdar, a Data and AI Specialist at Google, worked with an asset management company to improve transcription accuracy for video testimonials. Initially, the system had a WER of 37.88% (around 62% accuracy) because of background noise and configuration issues. After five optimization rounds - adjusting language settings, applying phrase hints, and boosting proper nouns - the WER dropped to just 3%, reaching an impressive 97% accuracy.

This approach demonstrates how WER serves as a reliable metric to track improvements in transcription accuracy after noise reduction.

Analyze Cleaned vs. Raw Audio Transcriptions

Beyond WER, another effective strategy is comparing transcription outputs directly. Start by transcribing the same audio twice: once as-is (raw) and once after applying noise reduction. Then, compare both outputs against a human-generated, 100% accurate transcript. Using this reference is critical - comparing two ASR-generated transcripts only highlights differences, not which one is more accurate.

For reliable results, test with 30 minutes to 3 hours of audio that includes at least 800 spoken utterances. A method shows clear improvement if it produces a lower WER in at least 429 out of 800 utterances. To dig deeper, break down the errors - substitutions, deletions, and insertions. For instance, a significant drop in deletions suggests the system is now picking up words that were previously lost in the noise.
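The article does not say where the 429-out-of-800 threshold comes from. One common way to reason about per-utterance wins is a sign test, which asks how likely a given number of wins would be if neither method were actually better. The sketch below is offered under that assumption. The function name is hypothetical, and utterances where both methods tie are assumed to have been left out of the count.

```python
from math import comb

def sign_test_p_value(wins, total):
    """One-sided exact binomial p-value: P(X >= wins) with a 50/50 chance per utterance."""
    if not 0 <= wins <= total:
        raise ValueError("wins must be between 0 and total")
    tail = sum(comb(total, k) for k in range(wins, total + 1))
    return tail / 2 ** total

for wins in (410, 429):
    p = sign_test_p_value(wins, 800)
    print(f"{wins}/800 wins -> p = {p:.4f}")
```

A small p-value means the win count would be unlikely if the two methods were equally good. With 800 utterances, a few dozen wins above the halfway mark is enough to clear the usual 0.05 level, while a lead of only ten is not.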

This dual approach - WER analysis and direct transcript comparison - provides a comprehensive view of how noise reduction impacts transcription accuracy.

Conclusion

Getting noise reduction right is a game-changer for speech-to-text accuracy, and the secret lies in working with raw, unfiltered audio. Modern ASR systems thrive on unprocessed input, as pre-filtered signals can actually hurt their performance. As Bridget McGillivray from Deepgram explains:

"If your model never learned to survive chaos, cosmetic cleaning won't rescue it, and may be what kills performance".

The link between signal-to-noise ratio and transcription quality is clear: as noise increases, accuracy drops. This makes capturing audio in its raw form essential. Models trained on noisy data are better equipped to handle real-world audio challenges, eliminating the need for heavy pre-processing.

To boost your ASR performance, focus on three practical steps. First, ensure you're capturing high-quality audio by placing microphones close to the speaker and using lossless codecs like FLAC or LINEAR16. Second, skip external noise filters and send raw audio directly to modern ASR APIs - these systems are built to handle noisy signals effectively. Third, test your setup with recordings from real-world environments instead of sanitized test files.

Balancing noise suppression with clear speech is a recurring theme, and real-world results show why it matters. In November 2025, CVS Health processed over 140,000 pharmacy calls per hour with 92% accuracy by using models trained on noisy data and avoiding preprocessing pipelines. They also maintained sub-300ms latency for their voice agents. Similarly, the healthcare startup mentioned earlier saw accuracy jump from 60% to 94% while halving latency by switching to a domain-trained model that handled ventilator noise.

For those using tools like Revise, applying these principles will lead to cleaner, more accurate transcriptions. The future of speech recognition isn’t about filtering out the noise - it’s about building smarter systems that work with the full audio signal.

FAQs

How do neural networks enhance noise reduction in speech-to-text systems?

Neural networks improve noise handling by learning, from large sets of clean and noisy recordings, which parts of a signal are speech and which are background sound. Rather than applying a fixed filter, a trained model keeps cues such as syllable timing and harmonic structure, which stay consistent in noisy settings, while suppressing the rest.

This capability is particularly useful in noisy settings, where it enhances transcription accuracy by isolating the speaker's voice from surrounding noise. As these systems learn from a wide range of audio samples, they become better equipped to handle various scenarios, making speech-to-text technology more dependable and efficient.

How does using raw audio impact speech-to-text accuracy?

Using raw audio can enhance speech-to-text accuracy because it retains critical acoustic details that might otherwise be lost during noise reduction. While noise reduction is great for filtering out background sounds, it can unintentionally strip away subtle audio features that are crucial for accurate transcription.

To achieve the best results, it’s essential to determine whether your system performs better with raw, unprocessed audio or noise-reduced input. Many modern speech recognition models are built to process raw audio effectively, delivering higher accuracy even in challenging environments.

How can I evaluate my speech-to-text system in noisy conditions?

To see how well your speech-to-text system performs in noisy situations, try testing it with audio recordings that feature different kinds of background noise - like chatter, traffic sounds, or music. This approach gives you a sense of how accurate and reliable the system is in everyday conditions.

For even better results, consider fine-tuning the system's acoustic model using audio data that includes background noise. This adjustment can help the system adapt to tough environments and provide more dependable outcomes.